If it matters to you whether a new drug will cure you or leave you with debilitating side effects, if it's sensible to produce legislation to prevent climate change, or if drinking coffee will raise your blood pressure - it pays to know what the facts are. How do we find out what the facts* are? Usually scientific studies are the best place to look. But we've all heard about the replication crisis, we know that researchers can sometimes make mistakes, and there are some fields (like nutrition) that seem to produce contradictory results every other month. So how do we separate scientific fact from fiction?

I don't really have a great answer to this question. At a first pass, of course, the solution is to use Bayesian reasoning. Have some prior belief about the truth of the hypothesis and then update that belief according to the probability of the evidence given the hypothesis. In practice, though, this is much easier said than done because we often don't have a good estimate of the prior likelihood of the hypothesis and it's also difficult to judge the probability of the evidence given the hypothesis. As I see it, the latter problem is twofold.

- Studies don't directly tell you the probability of the evidence given the hypothesis. Instead, they give you p-values, which tell you (roughly) the probability of seeing evidence at least this extreme if the null hypothesis were true. But there are many possible null hypotheses, such as the evidence being accounted for by a different variable that the authors didn't think of. This is why scientists do controls to rule out alternative hypotheses, but it's hard to think of every possible control.

- The evidence in the study isn't necessarily "true" evidence. You have to trust that the scientists collected the data faithfully, did the statistics properly, and that the sample from which they collected data is a representative sample**.
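To make the Bayesian update described above concrete, here is a minimal sketch for a yes/no hypothesis. The function name `posterior` and all of the numbers are made up for illustration; in practice, coming up with those inputs is exactly the hard part.

```python
def posterior(prior, p_evidence_given_h, p_evidence_given_not_h):
    """Bayes' rule for a binary hypothesis H.

    prior                  -- P(H) before seeing the study
    p_evidence_given_h     -- P(evidence | H is true)
    p_evidence_given_not_h -- P(evidence | H is false)
    """
    numerator = p_evidence_given_h * prior
    denominator = numerator + p_evidence_given_not_h * (1.0 - prior)
    return numerator / denominator


# Hypothetical inputs (assumptions, not taken from any real study):
# a 10% prior, and evidence that is 80% likely if H is true
# but only 5% likely if H is false.
updated = posterior(prior=0.10, p_evidence_given_h=0.80, p_evidence_given_not_h=0.05)
print(round(updated, 3))  # 0.64
```

Note that the second likelihood is the probability of the evidence if the hypothesis is false for any reason, which is why unconsidered alternative explanations matter so much.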

In theory, the only strategy here is to be super-duper critical of everything, try to come up with every possible alternative hypothesis, and recalculate all the statistics yourself to make sure that they weren't cheating. And then replicate the experiment yourself. But, as a wise woman once said, "ain't nobody got time for that." It goes without saying that if the truth of a particular claim matters a lot to you, you should invest more effort into determining its veracity. But otherwise, in most low-stakes situations (e.g. arguing with people on Facebook) you're not going to want to do that kind of legwork. Instead, it's best to have a set of easy-to-apply heuristics that mostly work most of the time, so that you can "at a glance" decide whether something is believable or not. So I've come up with a list of heuristics that I use (sorta kinda, sometimes) to quickly evaluate whether a study is believable.

- Mind your priors. The best way to know whether a study is true or not is to have a pretty good idea of whether the claim is true or not before reading the study. This is kind of hard to do if it's a study outside of your field, but if it does happen to be in your field, you should know what kind of similar work has been done before reading the study. This can give you a sense going in of how believable the claim is. If you've been part of a field for a while, you develop a sixth sense (seventh if you're a Buddhist) or a kind of intuition for what things sound plausible. At the same time...

- Beware of your own confirmation bias. Don't believe something just because you want it to be true or because it confirms your extant beliefs or (especially) political views. And don't reject something because it argues against your extant beliefs or political views. Don't engage in isolated demands for rigor. If you know that you have a pre-existing view supporting the hypothesis, push your brain as hard as you can to criticize the evidence. If you know that you have a pre-existing view rejecting the hypothesis, push your brain as hard as you can to defend the evidence.

- Beware of your own experience bias: Your personal experience is a highly biased sample. Don't disbelieve a high-powered study (with a large and appropriately drawn sample) simply because it contradicts your experience. On the other hand, your personal experience can be a good metric for how things work in your immediate context. If a drug works for 90% of people and you try it and it doesn't work for you, it doesn't work for you. At the same time, be careful, because people don't necessarily quantify their personal experience correctly.

- Mind your sources. Believability largely depends on trust, so if you are familiar with the authors and think they usually do good work, be trusting; if you don't know the authors, rely on other things. My experience is that university affiliation doesn't necessarily matter that much, so don't be overly impressed that a study came out of Harvard. In terms of journals: peer-reviewed academic journals are best, preferably high-tier and highly cited, but I think that probably gives you diminishing returns. High-tier journals often focus on exciting claims, not necessarily the best-verified ones. Unpublished or unreviewed non-partisan academic stuff is also at least worthwhile to look at and should be given some benefit of the doubt. Then come partisan academish sources, like think tanks, which promote a particular agenda but are still professional and know what they're doing. You should be more skeptical of these kinds of studies, but you shouldn't reject them outright. Be skeptical if they find evidence in favor of their preferred hypothesis, and be more trusting if their evidence is seemingly against their preferred hypothesis.

Don't trust non-professionals, including journalists, especially highly. Training exists for a reason. Some publications like The Economist seem to have some people there who know what they're doing in terms of data science and visualization. Some individual journalists can be relied upon to accurately report findings of trained scientists. The best way to do source criticism is to weight sources by how close to the truth they've been in the past, like Nate Silver and the FiveThirtyEight blog do with political polling. Don't trust politicians citing studies. Ever. Doesn't matter if they're from your tribe or not. Same thing goes for political memes, chain emails forwarded from grandma, etc. You are not going to get good information from people who care about partisan goals more than a dispassionate understanding of truth. And sure, everyone is biased a bit, but there are levels, man.

- Replication. The obvious one. If a bunch of people have done it a bunch of times and gotten the same results, believe it. If a bunch of people have done it a bunch of times and gotten different results, assume regression to the mean. If a bunch of people have done it a bunch of times and gotten the opposite results of the study you're looking at, the study you're looking at is probably wrong.

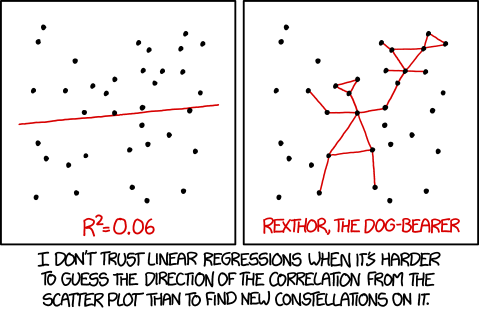

- Eyeball the figures. If the central claim of a study is easily borne out or contradicted from a glance at the graphs, don't worry too much about p-values or whatnot. This won't always work, but we're aiming for a "probably approximately correct" framework here, and a quick glance at figures will usually tell you if a claim is qualitatively believable.

- Different fields have different expectations of reliability. Some fields produce a lot more sketchy research than others. Basic low-level science like cell biology tends to have fairly rigorous methodology and a lot of results are in the form of "we looked in a microscope and saw this new thing we didn't see before." So there's usually no real reason to disbelieve that sort of thing. Most of the experimental results in my field (dendritic biophysics) are more or less of that nature, and I tend to believe experimentalists. Fields like psychology, nutrition, or drug research are more high-variance. People can be very different in their psychological makeup or microbiome, so even if someone did the most methodologically rigorous study in the field it might not generalize to the overall population. Simple systems tend to be amenable to scientific induction (an electron is an electron is an electron); it's harder to generalize from one complex system (like a whole person or a society) to other complex systems. That doesn't mean all findings in psychology or nutrition are wrong; I would just put less confidence in them. That being said, some fields involving complex systems (like election polling) are basically head-counting problems. I tend to believe this kind of data within some margin of error. This is because it tends to be more-or-less reliable on average and because...

- Bad data is better than no data. If you have a question and there's only one study that tries to answer the question, unless you have a really good reason to have an alternative prior, use the study as an anchor, even if it's sketchy. That is, unless it has glaring flaws that render it completely useless. The value of evidence is not a binary variable of "good" or "not good"; it's a number on a continuous spectrum between 0 ("not correlated with reality") and 1 ("correlated with reality"). So use the data that you have until something better comes along.

- The test tests what the test tests. If the central claim of a study does not seem to be prima facie falsifiable by the experiment that they did, then run in the other direction. I tend to have faith in academia, but sometimes people do design studies that don't demonstrate anything. Moreover, a lot of studies are taken further than they are meant to be. Psychology studies can inform policy, but don't expect to always be able to draw a line from a psychology experiment done in a laboratory to policy. Policy interventions based on psychology need to be tested and evaluated as policy interventions in the real world (looking at you, IAT). Drug studies on rats need to be carried out on humans. Etc. Popular media often sensationalizes scientific results, which are usually limited in scope.

- Not all mistakes are invalidating. Scientists are obsessive about getting things right, because we have to defend our claims to our peers. Nevertheless, science is hard and we sometimes make mistakes. That's life. A single mistake in a study doesn't mean that the study is entirely wrong. The scope of a mistake is limited to the scope of the mistake. Some mistakes will invalidate everything, some won't. Often a qualitative result will hold even if someone messed up the statistics a bit. It's easy to point out mistakes; the challenge is to extract a signal of truth from the noise of human fallibility.

- When in doubt, remember Bayes. Acquire evidence, update beliefs according to reliability of evidence. The rest is commentary.
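As a rough sketch of what "acquire evidence, update beliefs" looks like when several studies come in, here is the same rule in odds form. The helper name `update_with_studies` and the likelihood ratios are hypothetical, and multiplying the ratios assumes the studies are independent of each other, which real studies often aren't. A sketchy study gets a likelihood ratio close to 1, so it nudges the belief a little rather than not at all.

```python
def update_with_studies(prior_prob, likelihood_ratios):
    """Update a probability using one likelihood ratio per study.

    Each ratio is P(study's result | H true) / P(study's result | H false).
    Ratios above 1 support H, ratios below 1 count against it, and a
    ratio near 1 means the study is barely informative.
    Assumes the studies are independent pieces of evidence.
    """
    odds = prior_prob / (1.0 - prior_prob)
    for ratio in likelihood_ratios:
        odds *= ratio
    return odds / (1.0 + odds)


# Hypothetical example: a 20% prior, then a sketchy supportive study (1.5),
# a strong supportive study (10), and a modest failed replication (0.5).
belief = update_with_studies(0.20, [1.5, 10.0, 0.5])
print(round(belief, 3))  # 0.652
```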

* In science, at least in my field, we hardly ever use the word "fact"; we prefer to talk about evidence. Still, there are findings that are so well-established that no one questions them. Discussions at scientific conferences usually revolve around issues that haven't been settled yet, so scientific conferences are ironically fact-free zones.

** "Sample size" is often not the issue. That's the classic low-effort criticism from non-scientists about scientific studies; it is sometimes valid, but usually the standard statistical measures take it into account (if your sample size is too small you won't get reasonable p-values). Rather, even if your sample size is large enough to produce a statistically significant effect for your particular test sample, you have to be wary of generalizing from your test population to the general population (sampling bias). If the two populations are "basically the same" - that is, your experimental population was uniformly sampled from the broader population - then you can use the standard sampling error metric to estimate how far off your results are for the general population. But if your test population is fundamentally different from the general population (e.g. you ran all your experiments on white undergraduate psychology students) there's reason to be skeptical that the results will generalize to the broader population.
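For the "basically the same" case, the standard sampling error for a proportion can be sketched as below. This uses the usual normal approximation for a simple random sample; the function name and the poll numbers are invented for illustration. It only captures random sampling error and says nothing about sampling bias, which is the harder problem described above.

```python
import math


def margin_of_error(p_hat, n, z=1.96):
    """Approximate margin of error for a proportion.

    p_hat -- observed proportion in the sample (between 0 and 1)
    n     -- sample size, assumed to be a simple random sample
    z     -- z-score for the confidence level (1.96 is roughly 95%)
    """
    return z * math.sqrt(p_hat * (1.0 - p_hat) / n)


# Hypothetical poll: 52% of 1,000 randomly sampled respondents say yes.
moe = margin_of_error(0.52, 1000)
print(f"{moe:.3f}")  # 0.031, i.e. about plus or minus 3 percentage points
```

Quadrupling the sample size only halves the margin of error, and no sample size fixes a sample drawn from the wrong population.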
